Using Keycloak and Kafka Streams to Detect Identity Anomalies

“What if you could detect identity fraud in real time — before any actual damage occurs?”

Fraud detection is no longer optional in today’s security landscape. Using Keycloak’s event system and Kafka Streams, we’ll build a lightweight, real-time fraud prevention pipeline that catches suspicious login behavior such as brute-force attempts or logins from unrecognized devices.

In our previous article, Let’s Publish Keycloak Events to Kafka using SPI (plugins), we explored how to stream Keycloak events into Kafka. Now we’ll take it further by processing those events in real time to flag login anomalies.

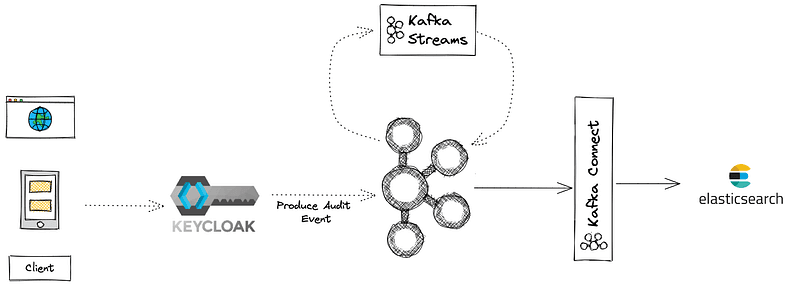

🔧 Architecture Overview: Real-time Fraud Detection

Step-by-step breakdown:

- A client attempts to log in through Keycloak.

- Keycloak emits a login event to Kafka (via our custom SPI).

- Kafka Streams processes login and error events to:

  - Detect new or suspicious devices.

  - Monitor failed login attempts.

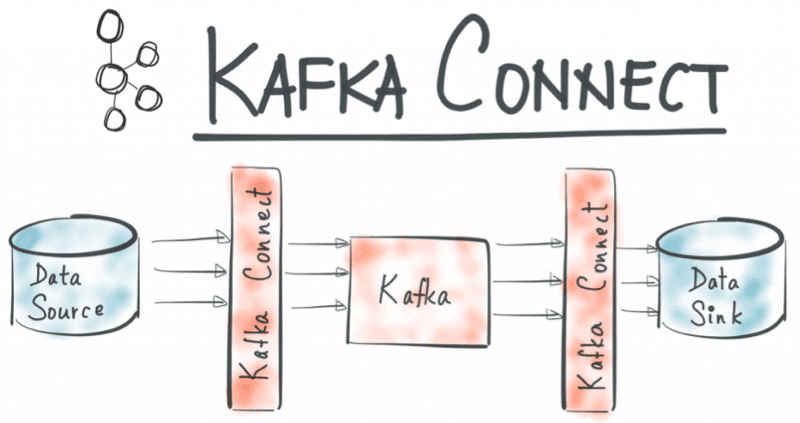

- Kafka Connect ships flagged anomalies to Elasticsearch, where Kibana handles visualization.

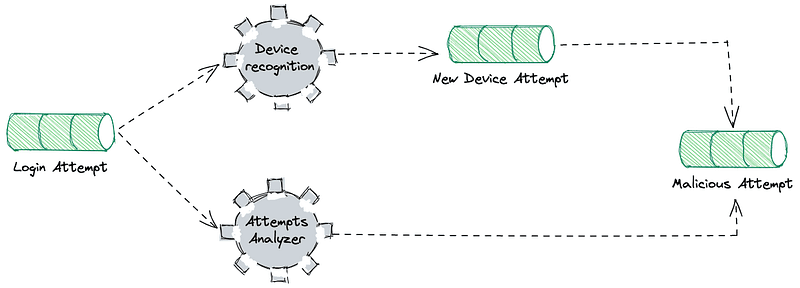

🔍 Two Building Blocks of the Detection System

1. Device Recognition Block

Flags unknown devices by comparing current login fingerprints to a stored set.

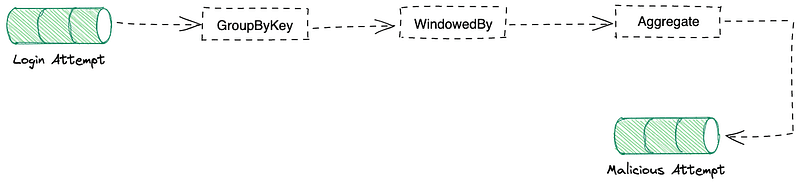

2. Attempts Analyzer Block

Aggregates login errors in time windows to detect brute-force attempts.

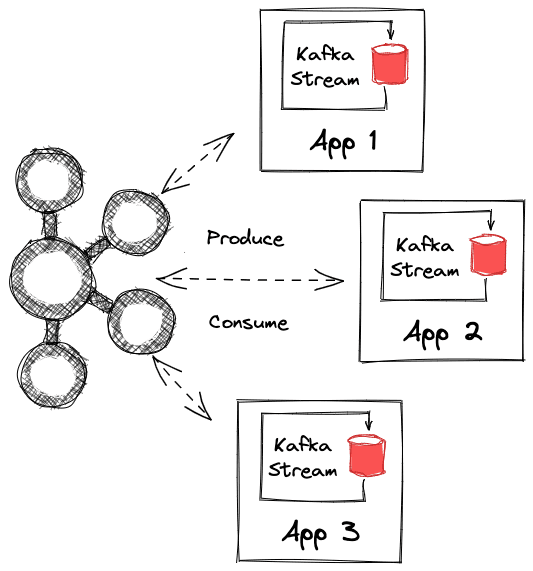

💡 What is Kafka Streams?

Kafka Streams is a lightweight client library for stream processing built on Kafka. It lets you build stateless or stateful event processors in Java or Scala.

⚠ Kafka brokers do not perform computation. All stream logic runs in the Kafka Streams application.

🔍 Filtering Login Events

We filter audit event records to extract just LOGIN and LOGIN_ERROR types before further analysis:

    // Pseudo-code example: keep only login successes and failures
    const loginEvents = stream.filter(
      (key, event) => event.type === "LOGIN" || event.type === "LOGIN_ERROR"
    );

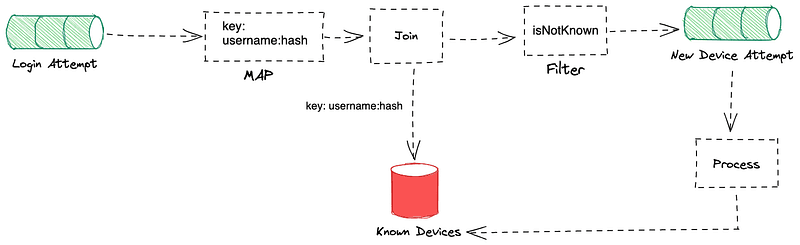
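As a runnable illustration of the same predicate, here is a minimal sketch in plain JavaScript that filters an in-memory array rather than a real stream. The event shape (`type`, `username`) and the sample values are assumptions made for illustration; the actual payload depends on how the SPI serializes Keycloak events.

```javascript
// Event types relevant to login fraud detection.
const LOGIN_TYPES = new Set(["LOGIN", "LOGIN_ERROR"]);

// Predicate mirroring the stream filter: true only for login successes and failures.
function isLoginEvent(event) {
  return LOGIN_TYPES.has(event.type);
}

// Hypothetical sample of audit events (field names are assumed).
const auditEvents = [
  { type: "LOGIN", username: "alice" },
  { type: "LOGOUT", username: "alice" },
  { type: "LOGIN_ERROR", username: "bob" },
  { type: "UPDATE_PROFILE", username: "bob" },
];

const loginEvents = auditEvents.filter(isLoginEvent);
console.log(loginEvents.map((e) => e.type)); // [ 'LOGIN', 'LOGIN_ERROR' ]
```

Keeping the predicate in a named function makes it easy to unit test separately from the streaming topology.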

🧠 Device Recognition System

We detect unfamiliar devices by joining login attempts (KStream) with a table of known devices (KTable).

    const deviceKey = `${event.username}:${hashDevice(event.deviceInfo)}`;

Device Hashing Example

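    // Builds a stable fingerprint from the device attributes.
    // Note: base64 is an encoding, not a cryptographic hash; it only normalizes the key.
    function hashDevice({ os, version, deviceName, isMobile }) {
      const raw = `${os}|${version}|${deviceName}|${isMobile}`;
      return Buffer.from(raw).toString("base64");
    }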

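With a left join, a login whose key has no entry in the known-devices table arrives with a null table-side value. In that case the device is new and may be flagged for review.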
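The lookup logic can be sketched without Kafka at all. The example below is a plain JavaScript simulation, not the Kafka Streams API: a `Map` stands in for the KTable of known devices, and `checkDevice` is a hypothetical helper that plays the role of the left join. Registering a device on first sight and the `time` field are assumptions; a real system might require user confirmation first.

```javascript
// Same fingerprint function as in the article (base64 encoding, not a cryptographic hash).
function hashDevice({ os, version, deviceName, isMobile }) {
  const raw = `${os}|${version}|${deviceName}|${isMobile}`;
  return Buffer.from(raw).toString("base64");
}

// Stand-in for the KTable of known devices: deviceKey -> metadata.
const knownDevices = new Map();

// Stand-in for the left join: look up the key and flag the login when nothing matches.
function checkDevice(event) {
  const deviceKey = `${event.username}:${hashDevice(event.deviceInfo)}`;
  const known = knownDevices.get(deviceKey) ?? null; // null = no match on the table side
  const isNewDevice = known === null;
  if (isNewDevice) {
    // Assumed policy: remember the device so later logins are not flagged again.
    knownDevices.set(deviceKey, { firstSeen: event.time });
  }
  return { deviceKey, isNewDevice };
}

// Hypothetical device and login events.
const laptop = { os: "Linux", version: "6.1", deviceName: "laptop", isMobile: false };
const first = checkDevice({ username: "alice", deviceInfo: laptop, time: 1000 });
const second = checkDevice({ username: "alice", deviceInfo: laptop, time: 2000 });
console.log(first.isNewDevice, second.isNewDevice); // true false
```

Because the key combines the username with the fingerprint, the same physical device is tracked separately for each user.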

⚠ Attempts Analyzer

To detect brute-force attacks, we count failed logins per key within fixed, non-overlapping 5-minute (tumbling) windows:

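    // Pseudo-code mirroring the Kafka Streams DSL
    loginFailuresStream
      .groupByKey()
      // 5-minute tumbling windows, no grace period for late records
      .windowedBy(TimeWindows.ofSizeWithNoGrace(Duration.ofMinutes(5)))
      .aggregate(
        () => 0, // initial count per key and window
        (key, event, count) => (event.type === "LOGIN_ERROR" ? count + 1 : count)
      )
      .filter((_, count) => count > 3) // keep windows with more than 3 failures
      .toStream() // the windowed table must become a stream before it can be written
      .to("malicious-attempts");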
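To make the windowing arithmetic concrete, here is a minimal in-memory sketch in plain JavaScript. It is a simulation under stated assumptions, not the Kafka Streams API: `detectBruteForce`, the event fields (`key`, `type`, `timestamp` in milliseconds), and the sample data are all hypothetical.

```javascript
const WINDOW_MS = 5 * 60 * 1000; // 5-minute tumbling window
const THRESHOLD = 3;             // flag when the failure count exceeds this value

// Counts LOGIN_ERROR events per (key, window) and returns the entries above the threshold.
function detectBruteForce(events) {
  const counts = new Map(); // "key@windowStart" -> failure count
  for (const { key, type, timestamp } of events) {
    if (type !== "LOGIN_ERROR") continue;
    // Tumbling windows are aligned to multiples of the window size since the epoch.
    const windowStart = Math.floor(timestamp / WINDOW_MS) * WINDOW_MS;
    const id = `${key}@${windowStart}`;
    counts.set(id, (counts.get(id) ?? 0) + 1);
  }
  return [...counts]
    .filter(([, count]) => count > THRESHOLD)
    .map(([id, count]) => ({ id, count }));
}

// Hypothetical input: five failures for alice one minute apart, two for bob.
const events = [
  ...[0, 1, 2, 3, 4].map((m) => ({ key: "alice", type: "LOGIN_ERROR", timestamp: m * 60000 })),
  { key: "bob", type: "LOGIN_ERROR", timestamp: 0 },
  { key: "bob", type: "LOGIN_ERROR", timestamp: 60000 },
];
console.log(detectBruteForce(events)); // [ { id: 'alice@0', count: 5 } ]
```

One consequence of tumbling windows is that a burst straddling a window boundary is split across two counts and may stay under the threshold. Hopping or sliding windows reduce that blind spot at the cost of more state.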

✅ Real-World Use Case

For example, if a user normally logs in from Paris and a device in Vietnam suddenly produces 5 failed attempts within the same 5-minute window, the failure count exceeds the threshold of 3 and an alert is triggered.

📊 Visualizing Anomalies with Elasticsearch + Kibana

So far, we detect and flag anomalies, but they live only in Kafka topics. To explore them, we use Kafka Connect to stream them into Elasticsearch.

⚙ Kafka Connect + Elasticsearch

Kafka Connect bridges Kafka and external systems. For Elasticsearch, we use a Sink Connector.

Sample Connector Configuration

    {
      "name": "elasticsearch-sink",
      "config": {
        "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
        "topics": "malicious-attempts",
        "connection.url": "http://elasticsearch:9200",
        "type.name": "_doc"
      }
    }
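A configuration like this is typically submitted to a Kafka Connect worker through its REST API (`POST /connectors`). The sketch below only builds the request so it can be inspected before sending. The worker address `http://localhost:8083` is an assumption (8083 is the default REST port), and `buildCreateRequest` is a hypothetical helper, not part of any library.

```javascript
// Connector definition copied from the sample configuration.
const connector = {
  name: "elasticsearch-sink",
  config: {
    "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
    topics: "malicious-attempts",
    "connection.url": "http://elasticsearch:9200",
    "type.name": "_doc",
  },
};

// Builds the HTTP request for the Kafka Connect REST API (POST /connectors).
function buildCreateRequest(connectUrl, definition) {
  return {
    url: `${connectUrl.replace(/\/+$/, "")}/connectors`, // tolerate trailing slashes
    options: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(definition),
    },
  };
}

// Assumed worker address for a local setup.
const request = buildCreateRequest("http://localhost:8083/", connector);
console.log(request.url); // http://localhost:8083/connectors

// To actually submit it (Node 18+): await fetch(request.url, request.options);
```

Separating request construction from the network call keeps the example testable without a running Connect cluster.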

⚖ Trade-offs and Considerations

- Kafka Streams state stores can grow large with long time windows.

- You’ll need monitoring and storage management.

- For higher flexibility, consider exploring ksqlDB or Apache Flink.

✅ Conclusion

The entire code is available here: kafka-stream-fraud-java

We’ve built a production-ready architecture to detect suspicious logins using Keycloak events, Kafka Streams for processing, and Elasticsearch for visualizing attempts in real time.

🚀 In a future article, we’ll explore how to replace this setup using ksqlDB to reduce code complexity even further.

🙌 Like this post?

Feel free to share it with others or reach out if you want to collaborate on Kafka security use cases!